Net Heads

Huge numbers of brain cells may navigate small worlds

About 40 years ago, the late psychologist Stanley Milgram tapped into the commonsense notion that “it’s a small world.” Milgram asked 60 people to send a folder to a certain individual whom none of them knew. Participants were given a little information about the target person and asked to mail the folder to a friend or acquaintance who, in their view, was more likely to know the stranger than they were. Each recipient of the folder was asked to do the same, until the material reached its destination.

Only one-quarter of the chains were completed. In those cases, though, the folder passed through an average of six intermediaries. Milgram’s project inspired the phrase “six degrees of separation” and led, for example, to people tracing movie actors’ working relationships back to actor Kevin Bacon.

The small-world phenomenon got a big boost in 1998. Steven Strogatz of Cornell University and Duncan Watts of Columbia University used mathematical simulations to show that all sorts of large networks can be traversed in a small number of steps. Strogatz and Watts demonstrated how this effect applies to the more than 4,300 elements of the electric-power grid in the western United States and to the collaborative relationships of more than 225,000 professional actors.

Strogatz and Watts also demonstrated the relevance of the small-world idea to the array of 282 brain cells in worms called nematodes.

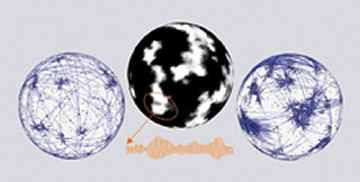

Small-world networks have a distinctive structure: There’s a cluster of nodes, each connected to its immediate neighbors, with a few that connect to distant nodes. This structure enhances the power and efficiency of these systems, Strogatz and Watts argued.
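To make that structure concrete, here is a minimal Python sketch, not the researchers' own code. It assumes a toy ring of 100 nodes, each linked to its two nearest neighbors on either side, and then adds five hand-picked long-range links. Strogatz and Watts rewired links at random; fixed shortcuts are swapped in here only so the result is reproducible. The function names and all the numbers are illustrative assumptions.

```python
from collections import deque


def ring_lattice(n, k):
    """Build a ring in which each node links to its k nearest neighbors per side."""
    graph = {i: set() for i in range(n)}
    for i in range(n):
        for step in range(1, k + 1):
            j = (i + step) % n
            graph[i].add(j)
            graph[j].add(i)
    return graph


def add_shortcuts(graph, pairs):
    """Return a copy of the graph with extra long-range links added."""
    new = {node: set(nbrs) for node, nbrs in graph.items()}
    for a, b in pairs:
        new[a].add(b)
        new[b].add(a)
    return new


def average_path_length(graph):
    """Mean number of steps between all pairs of nodes (breadth-first search)."""
    total, pairs = 0, 0
    for source in graph:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            node = queue.popleft()
            for nbr in graph[node]:
                if nbr not in dist:
                    dist[nbr] = dist[node] + 1
                    queue.append(nbr)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


def average_clustering(graph):
    """Mean fraction of each node's neighbor pairs that are themselves linked."""
    scores = []
    for nbrs in graph.values():
        nbrs = list(nbrs)
        d = len(nbrs)
        if d < 2:
            scores.append(0.0)
            continue
        linked = sum(
            1
            for x in range(d)
            for y in range(x + 1, d)
            if nbrs[y] in graph[nbrs[x]]
        )
        scores.append(linked / (d * (d - 1) / 2))
    return sum(scores) / len(scores)


# Hypothetical toy network: a ring, then the same ring with five shortcuts.
ring = ring_lattice(100, 2)
small_world = add_shortcuts(
    ring, [(0, 50), (20, 70), (40, 90), (60, 10), (80, 30)]
)

for name, net in (("ring", ring), ("ring + shortcuts", small_world)):
    print(name, average_clustering(net), average_path_length(net))
```

In this toy case, the five shortcuts barely change the local clustering, which slips from 0.5 to about 0.48, while the average number of steps between nodes falls below the ring's roughly 12.9. That combination of tight neighborhoods and short routes is the small-world signature.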

More and more neuroscientists agree. Motivated by Milgram and his mathematical progeny, researchers are now devising models grounded in the small-world effect to explain how the human brain works. These scientists are looking for small-world setups within the brain’s massive, interconnected cell networks and for moment-to-moment electrical manipulations that, they suspect, foster thinking and learning. Their efforts are a sharp departure from popular brain-imaging efforts to pinpoint neural niches that specialize in particular mental capabilities.

“Researchers have just begun to apply a huge arsenal of approaches to understanding how brain networks are patterned, how they evolve and grow, and how they generate dynamic structures,” says neuroscientist Olaf Sporns of Indiana University in Bloomington.

Fractal frequencies

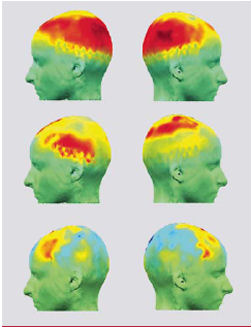

The 22 volunteers recruited by neuroscientist Danielle S. Bassett of the National Institute of Mental Health in Bethesda, Md., and her colleagues didn’t draw a tough assignment. Each participant simply lay under sensors that, at 275 points across the scalp, measured the magnetic field produced by neurons’ electrical discharges on the brain’s surface. Half watched a computer screen and tapped their right index fingers when a designated shape such as a square appeared. The rest saw the shapes but weren’t asked to do anything in response.

Their brains did plenty, though. Bassett’s team analyzed the six types of brain waves that showed up in all the participants. Each wave type crackled at a specific frequency, the result of millions of cells at various locations emitting synchronized signals.

From the electrical-activity associations that the researchers noted at pairs of scalp points, they constructed a simulated brain network. This provided an outline of which brain areas were working together at each of the six frequencies. It also revealed that at each frequency, brain networks exhibited a small-world arrangement. Clusters of closely grouped neural junctions typically incorporated a few connections to distant locations.
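The step from paired measurements to a network can be sketched in a few lines of Python. This is a simplified illustration, not the team's actual analysis: it assumes a plain Pearson correlation as the measure of association, an arbitrary cutoff of 0.8, and four made-up sensor recordings named `s1` through `s4`. Any pair of sensors whose signals rise and fall together strongly enough gets a link.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def build_network(signals, threshold):
    """Link every pair of sensors whose correlation meets the threshold."""
    names = sorted(signals)
    edges = set()
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if pearson(signals[a], signals[b]) >= threshold:
                edges.add((a, b))
    return edges


# Hypothetical recordings, invented for illustration only.
signals = {
    "s1": [1.0, 2.0, 3.0, 4.0, 5.0],
    "s2": [2.1, 3.9, 6.2, 8.0, 9.9],  # rises in step with s1
    "s3": [5.0, 1.0, 4.0, 2.0, 3.0],  # no clear relation to s1
    "s4": [3.0, 5.0, 1.0, 4.0, 2.0],
}

print(build_network(signals, threshold=0.8))
```

With these invented numbers, only `s1` and `s2` end up linked. Repeating the procedure separately for each frequency band would give one network per band, which is the kind of outline the paragraph above describes.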

Intriguingly, each frequency-specific brain wave looked like all the others did, although it operated on a unique scale. Biological patterns that repeat in this way over different scales of measurement are known as fractals.

Fractal, small-world brain networks reverberate in an electrical limbo state that almost, but not quite, comes unglued, Bassett’s group reports in the Dec. 19, 2006 Proceedings of the National Academy of Sciences. Especially at higher frequencies, these networks operate “on the edge of chaos,” the researchers say. In that precarious condition, synchronized activity relegated to a small brain area can rapidly expand into far-flung neural regions to deal with new challenges or situations, the team proposes.

Although brain networks looked much the same whether volunteers tapped their fingers or did nothing, one notable difference emerged. During the tapping task, the networks delineated by the two highest frequencies of synchronized cell firing displayed novel long-distance connections between the frontal brain and an area toward the back of the brain. Since high-frequency, synchronized neural activity may foster perception, memory, and consciousness (SN: 11/13/04, p. 310), Bassett suspects that this neural response guided a participant’s decision to tap when shown the various visual cues.

Bassett and her colleagues now plan to identify the structures within the brain that hook up to the synchronized networks on the brain’s surface. The researchers will use functional magnetic resonance imaging (fMRI), a technique in which scanners measure neural blood-flow changes that reflect surges or declines in cell activity.

For now, remarks Sporns, the new findings indicate that related brain networks operate at different electrical frequencies, each of which acts as a unique channel for transmitting information. The brain needs no central-control mechanism to direct mental life; interactions within and among networks do the trick.

The possibility that the brain steeps itself in flexible, chaotic activity is “an attractive idea,” notes neuroscientist Karl Friston of University College London. He says that Bassett’s team now needs to formulate a theory of how low- and high-frequency synchronized networks collectively respond to mental challenges.

Perking up

The notion that the brain thrives on chaos, in a mathematical sense, comes as no shock to neuroscientist Walter J. Freeman of the University of California, Berkeley. For the past 20 years, he has argued that the brain churns out a cascade of chaotic electrical activity that serves as a “get ready” state. From there, he theorizes, vast expanses of brain tissue shift into electrical-activity patterns that organize thought and perception.

Freeman welcomes the network approach of Bassett and her colleagues. What’s critical, he says, is Bassett’s observation that brain waves look the same at different frequencies. In his view, this feature allows for split-second transformations from one synchronized network to another.

At the two highest network frequencies measured during the finger-tapping exercise, brain activity synchronized across an area spanning at least 22 centimeters of the brain’s folded surface. Freeman has measured a comparably sized area of high-frequency synchronization in rabbits and other laboratory animals as they perform tasks.

“This distance is astonishing, considering that it covers most of each hemisphere containing several billion neurons,” Freeman says. “Explaining the large-scale reorganization of human brain activity is the central [neuroscience] issue of our day.”

With mathematicians Robert Kozma of the University of Memphis (Tenn.) and Bela Bollobás of the University of Cambridge in England, Freeman has developed a model of how clusters of neurons generate chaotic activity in brains at rest. In that model, when an individual searches for a memory or performs other mental work, synchronized brain states of increasing frequency emerge in rapid-fire fashion. As in Bassett’s study, each state produces, on its own scale, the same pattern of electrical activity.

Each brain state goes through three steps, Freeman suggests. Synchronization first emerges among individual neurons. It then spreads to interconnected populations of neurons. Finally, large neural structures with specific duties begin to reverberate in unison on each side of the brain.

This approach to modeling brain function, which Freeman’s group has dubbed neuropercolation, incorporates a small-world network into other neural features. For instance, the model includes some nodes that depress the activity of surrounding nodes and others that excite their neighbors, much as the brain contains cells that specialize in inhibiting or arousing each other.

Neuropercolation builds on the mathematics of percolation theory, which has long been used to model the sudden, exponential spread of forest fires and viruses. Recent research by Freeman’s group appears in the March 2006 Clinical Neurophysiology.
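The forest-fire picture from percolation theory is easy to try out. The Python sketch below is a textbook site-percolation toy, not Freeman's neuropercolation model. It assumes a 30-by-30 grid, trees planted at random with a chosen density, a fire lit along the left edge, and flames that jump only between side-by-side trees. The grid size, trial count, and random seed are arbitrary choices for illustration.

```python
import random
from collections import deque


def fire_crosses(size, density, rng):
    """Plant trees at random, ignite the left column, and report
    whether the fire reaches the right column."""
    trees = [[rng.random() < density for _ in range(size)] for _ in range(size)]
    burning = deque((r, 0) for r in range(size) if trees[r][0])
    burned = set(burning)
    while burning:
        r, c = burning.popleft()
        if c == size - 1:
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (
                0 <= nr < size
                and 0 <= nc < size
                and trees[nr][nc]
                and (nr, nc) not in burned
            ):
                burned.add((nr, nc))
                burning.append((nr, nc))
    return False


def crossing_fraction(density, size=30, trials=50, seed=0):
    """Fraction of random forests in which the fire spans the grid."""
    rng = random.Random(seed)
    hits = sum(fire_crosses(size, density, rng) for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for density in (0.2, 0.4, 0.5, 0.6, 0.7, 0.9):
        print(density, crossing_fraction(density))
```

In sparse forests the fire fizzles out near where it started, and in dense ones it almost always sweeps across. The switch between those outcomes happens over a narrow range of densities rather than gradually, and that abrupt transition is the feature percolation models are built to capture.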

Network approaches to the brain will dampen neuroscientists’ current passion for cordoning off patches of tissue presumed to specialize in various mental functions, Freeman asserts. He says that researchers err when they regard brightly colored neural spots in fMRI images as areas with unique responsibilities for a mental function being studied. These “great red spots” represent hubs of activity in larger, constantly shifting neural networks, he argues.

Both his group and Bassett’s team have found hubs of particularly intense activity within networks of synchronized brain cells. These hubs arise where neural connections are especially numerous.

However, fMRI investigators such as Friston still see value in the search for brain regions with specialized duties. Activity hubs probably integrate information shuttled in from other brain locations, Friston proposes.

Dark energy

Studies of brain activity, mostly with fMRI, have left neuroscientists with a puzzling discovery: The additional energy that the brain requires to perform a mental task is extremely small compared with the energy that it expends as an individual does nothing at all. New models of brain networks offer clues to why the resting brain consumes so much energy.

In the Nov. 24, 2006 Science, neuroscientist Marcus E. Raichle of Washington University School of Medicine in St. Louis refers to the brain’s intrinsic activity as “dark energy” because its functions remain mysterious. Studies indicate that fewer than 10 percent of neural connections ferry information from the external world, Raichle points out. This small proportion suggests that intrinsic activity carries out vital duties, he holds.

Raichle raises three possible explanations for the brain’s dark energy. It may in part stem from a person’s random thoughts and daydreams. Intrinsic activity might also emerge from neural efforts to balance the opposing signals of cells simultaneously trying to jack up and cool down brain activity. Or it could occur during an internal process of generating predictions about upcoming environmental demands and how to respond to them.

The fractal, small-world networks observed by Bassett’s team in resting volunteers, Sporns says, probably create the intrinsic activity that intrigues Raichle.

Freeman seconds that notion, emphasizing that chaotic network activity at rest reflects each individual’s past experiences and expectations of upcoming events. “You see what you expect or are trained to see, not what is there,” he says.

In Friston’s view, Bassett’s findings demonstrate the organized but flexible nature of intrinsic brain activity but not its purpose.

In an upcoming NeuroImage, neuroscientists Alexa M. Morcom and Paul C. Fletcher, both of the University of Cambridge, argue that intrinsic activity holds no special significance in the brain. They say that scientists know virtually nothing about thinking that occurs spontaneously and should stick to studies of brain responses during mental tasks.

Other researchers see much significance for background activity in promoting efficient thinking. For instance, a team led by neuroscientist Michael D. Greicius of Stanford University School of Medicine reported in 2004 that memory problems in people with mild Alzheimer’s disease coincide with unusually low amounts of intrinsic activity in several memory-related brain areas.

Whether or not the brain’s dark energy proves important, neuroscientists are increasingly confident that communication among the brain’s 100 billion neurons requires surprisingly few steps. It may well be a small world in there after all.