Scientists are fixing flawed forensics that can lead to wrongful convictions

Police lineups, fingerprinting and trace DNA techniques all need reform

Forensic science can help solve crimes, but some techniques and methods of analysis need improvement.

Charles Don Flores has been facing death for 25 years.

Flores has been on death row in Texas since a murder conviction in 1999. But John Wixted, a psychologist at the University of California, San Diego, says the latest memory science suggests Flores is innocent.

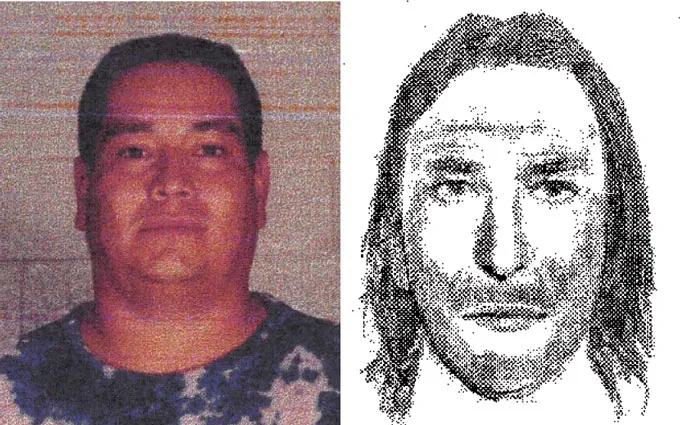

The murder Flores was convicted of happened during a botched attempt to locate drug money. An eyewitness, a woman who looked out her window while getting her kids ready for school, told the police that two white males with long hair got out of a Volkswagen Beetle and went into the house where the killing took place. The police quickly picked up the owner of the car, a long-haired white guy.

The police also suspected Flores, who had a history of drug dealing. He was also a known associate of the car owner, but there was a glaring mismatch with the witness description: Flores is Hispanic, with very short hair.

Still, the police put a “very conspicuous” photo of him in a lineup, says Gretchen Sween, Flores’ lawyer. “His [photo] is front and center, and he’s the only one wearing this bright-colored shirt, screaming ‘pick me!’”

But the eyewitness did not pick him. It was only after some time passed, during which the witness saw Flores’ picture on the news, that she came to think he was the one who entered the house. Thirteen months after she described two white men with long hair, she testified in court that it was Flores she saw.

Memory scientists have long been mistrustful of eyewitness reports because memory is malleable. But in recent years, Wixted says, research has shown that the initial lineup — the very first memory test — can be reliable. He argues that the witness’s initial rejection of Flores’ photo is evidence of innocence.

In 2013, Texas became the first state to introduce a “junk science” law allowing the courts to reexamine cases when new science warranted it. Sween has submitted hundreds of pages of arguments to get a judge to consider this new slant on memory science. So far, the Texas authorities have remained unconvinced.

“Our criminal justice system is generally slow to respond to any kind of science-based innovation,” laments Tom Albright, a neuroscientist at the Salk Institute for Biological Studies in La Jolla, Calif.

But researchers are pushing ahead to improve the science that enters the courtroom. The fundamental question for all forms of evidence is simple, Albright says. “How do you know what is right?” Science can’t provide 100 percent certainty that, say, a witness’s memory is correct or one fingerprint matches another. But it can help improve the likelihood that evidence is tested fairly or evaluate the likelihood that it’s correct.

Some progress has been made. Several once-popular forms of forensics have been scientifically debunked, says Linda Starr, a clinical professor of law at Santa Clara University in California and cofounder of the Northern California Innocence Project, a nonprofit that challenges wrongful convictions. One infamous example is bite marks. Since the 1970s, a few dentists have contended that a mold of a suspect’s teeth can be matched to bite marks in skin, though this has never been proved scientifically. A 2009 report from the National Academy of Sciences noted how bite marks may be distorted by time and healing and that different experts often produce different findings.

More than two dozen convictions based on bite mark evidence have since been overturned, many due to new DNA evidence.

Now researchers are taking on even well-accepted forms of evidence, like fingerprints and DNA, that can be misinterpreted or misleading.

“There are a lot of cases where the prosecution contends they have forensic ‘science,’ ” Starr says. “A lot of what they’re claiming to be forensic science isn’t science at all; it is mythology.”

Do the eyes have it?

Though research has been clear about the shortcomings of eyewitness memory for decades, law enforcement has been slow to adopt best practices to reduce the risk of memory contamination, as appears likely to have happened in Flores’ case.

The justice system largely caught on to the problem of memory in the 1990s, when the introduction of DNA evidence overturned many convictions. In more than 3,500 exonerations tracked since 1989, there was some version of false identification in 27 percent of cases, according to the National Registry of Exonerations. What’s become clear is that an eyewitness’s memory can be contaminated, as surely as if someone spit into a tube full of DNA.

Over the last decade, expert groups, including the National Academy of Sciences and the American Psychological Association, have recommended that police investigators treat a lineup — usually done with photographs these days — like a controlled experiment. For example, the officer conducting the lineup should be “blind” as to which of the people is the suspect and which are “fillers” with no known link to the crime. That way, the officer cannot subconsciously influence the results.

Those best-practice recommendations are catching on, but slowly, says Gary Wells, a psychologist at Iowa State University in Ames who coauthored the American Psychological Association recommendations. “We continue to have cases popping up, sort of right and left, in which they’re doing it wrong.”

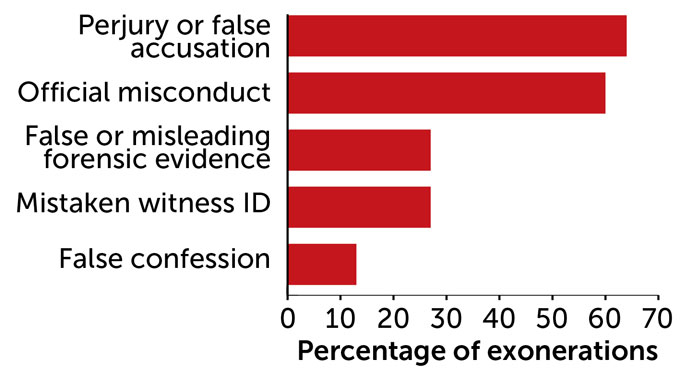

Exonerating circumstances

Of over 3,500 exonerations in the United States tracked since 1989, problems with forensic evidence and eyewitness testimony each played a role in about a quarter of the cases (exonerations can have multiple contributing factors, so the bars total more than 100 percent).

Factors contributing to exonerations since 1989

One problem arises when the filler photos don’t match the witness’s description of a suspect, or when the suspect stands out in some way, as Sween contends happened with Flores. Fillers should all match the features the eyewitness described, and they should be neither too similar to nor too different from the suspect. For now, it’s up to police officers to engineer the perfect lineup, with no external check on how appropriate the fillers are.

As a solution, Albright and colleagues are working on a computer system to select the best possible filler photographs. The catch is that computer algorithms tend to focus on different facial features than humans do. So the researchers asked human subjects to rank the similarity of different artificially generated faces and then used machine learning to train the computer to judge facial similarity the way people do. Using that information, the system can generate lineups (from real or AI-generated faces) in which all the fillers are roughly equally similar to the suspect. Next up, the scientists plan to figure out just how similar the filler faces should be to the suspect for the best possible lineup results.
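The idea of choosing fillers by a learned similarity score can be sketched in a few lines. This is a minimal illustration, not the researchers’ system: it assumes each face has already been converted into a numeric feature vector by some model trained on human similarity judgments (hypothetical here), uses cosine similarity as a stand-in for that model’s similarity measure, and keeps candidates whose similarity to the suspect falls inside a band whose bounds (`low`, `high`) are hypothetical tuning parameters.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, nonzero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def pick_fillers(suspect, candidates, low, high, k):
    """Return up to k candidate IDs whose similarity to the suspect lies in [low, high].

    Candidates nearest the middle of the band come first, so the chosen fillers
    are neither near-duplicates of the suspect nor obvious mismatches.
    The band bounds are hypothetical parameters, not published values.
    """
    target = (low + high) / 2
    scored = []
    for cid, vec in candidates.items():
        s = cosine_similarity(suspect, vec)
        if low <= s <= high:
            scored.append((abs(s - target), cid))
    scored.sort()
    return [cid for _, cid in scored[:k]]

# Hypothetical 2-D "face vectors" for illustration only.
suspect_vec = [1.0, 0.0]
pool = {
    "twin": [1.0, 0.0],   # too similar: identical vector
    "close": [0.9, 0.3],
    "mid": [0.7, 0.7],
    "far": [0.0, 1.0],    # too different
}
print(pick_fillers(suspect_vec, pool, low=0.6, high=0.97, k=2))
```

Where exactly to set the band is the open question the researchers say they plan to study next.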

Proper filler selection would help, but it doesn’t solve a key problem Wixted sees in the criminal justice system: He wants police and courts to appreciate a newer, twofold understanding of eyewitness testing. First, eyewitnesses who are confident in their identification tend to be more accurate, according to an analysis Wixted and Wells penned in 2017. For example, the pair analyzed 15 studies in which witnesses who viewed mock crimes were asked to report their confidence on a 100-point scale. Across those studies, the higher witnesses rated their own confidence, the more likely they were to identify the proper suspect. Accurate eyewitnesses also tend to make decisions quickly, because facial memory happens fast: “seconds, not minutes,” Wixted says.
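The confidence-accuracy pattern can be checked with a simple calibration table: group identifications by the witness’s stated confidence and compute the share that were correct in each group. The sketch below is an illustration under assumptions; the records are invented toy values on a 0 to 100 scale, not data from the 15 studies, and the bin edges are arbitrary choices.

```python
def accuracy_by_confidence(records, bin_edges):
    """Fraction of correct identifications within each confidence bin.

    records: list of (confidence, correct) pairs, confidence on a 0-100 scale.
    bin_edges: ascending edges; each bin is [lo, hi), so use 101 as the last
    edge to include a confidence of exactly 100.
    """
    results = {}
    for lo, hi in zip(bin_edges[:-1], bin_edges[1:]):
        outcomes = [correct for conf, correct in records if lo <= conf < hi]
        if outcomes:  # skip empty bins rather than divide by zero
            results[(lo, hi)] = sum(outcomes) / len(outcomes)
    return results

# Invented toy records, for illustration only.
toy_records = [
    (95, True), (92, True), (88, True), (85, False),
    (70, True), (65, False),
    (40, False), (30, False), (20, True),
]
for (lo, hi), acc in accuracy_by_confidence(toy_records, [0, 60, 80, 101]).items():
    print(f"confidence {lo}-{hi - 1}: {acc:.0%} correct")
```

In a real analysis, the caveat discussed in this section applies: the pattern is expected to hold for a first, properly conducted lineup.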

Conversely, low confidence indicates the identification is not very reliable. In trial transcripts from 92 cases later overturned by DNA evidence, most witnesses who thought back to the first lineup recalled low confidence or outright rejection of all options, according to research by Brandon Garrett, a law professor at Duke University.

The confidence correlation is appropriate only when the lineup is conducted according to all best practices, which remains a rare occurrence, warns Elizabeth Loftus, a psychologist at the University of California, Irvine. But Wixted argues it’s still relevant, if less so, even in imperfect lineups.

The second recent realization is that the very first lineup a witness sees, assuming police follow best practices to avoid biasing the witness, has the lowest chance of contamination. So a witness’s memory should be tested just once. “There’s no do-overs,” Wixted says.

Together, these factors suggest that a confident witness on the first, proper lineup can be credible, but that low confidence or subsequent lineups should be discounted.

Those recommendations come mainly from lab experiments. How does witness confidence play out in the real world? Wells tested this in a 2023 study with his graduate student Adele Quigley-McBride, an experimental psychologist now on the faculty at Simon Fraser University in Burnaby, Canada. They obtained 75 audio recordings of witness statements during real, properly conducted lineups. While the researchers couldn’t be sure that the suspects were truly the criminals, they knew that any filler identification must be incorrect because those people were not connected to the crime in any way.

Face-to-face

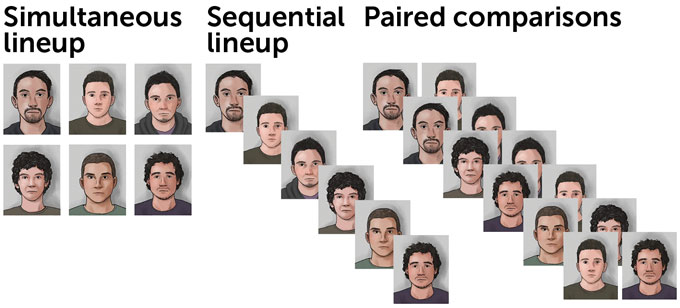

During a photo lineup, an eyewitness is shown a suspect’s photo and filler photos, either simultaneously or sequentially, and asked to ID the suspect. An alternative approach doesn’t ask a witness to make an identification. Instead, the witness views pairs of photos, some of which include the suspect and some of which don’t. For each pair, the witness judges which person looks more like the potential perp. The procedure ranks each lineup face to see if the suspect was overall judged as most similar to who the witness saw.

Volunteers listened to those lineup recordings and rated witness confidence. Witnesses who picked a face quickly and confidently, within about half a minute or less, were more likely to pick the suspect than a filler. In one experiment, for example, identifications of a suspect had been rated, on average, as 69 percent confident, compared with about 56 percent for identifications of a filler. Quigley-McBride proposes that timing witness decisions and recording confidence assessments could give investigators valuable information.

Witnesses also bring their own biases. They may assume that since the police have generated a lineup, the criminal must be in it. “It’s very difficult to recognize the absence of the perpetrator,” Wells says.

In a 2020 study, Albright used the science of memory and perception to design an approach that might sidestep witness bias, by not asking witnesses to pick out a suspect from a lineup at all. Instead, the eyewitness views pairs of faces one at a time. Some pairs contain the suspect and a filler; some contain two fillers. For each pair, the witness judges which person looks more like the remembered perpetrator. Based on those pairwise “votes,” the procedure can rank each lineup face and determine if the suspect comes out as most similar. “It’s just as good as existing methods and less susceptible to bias,” Albright says.
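The pairwise procedure can be sketched as a simple win-rate tally. That scoring rule is an assumption made for illustration: the description above does not say how the 2020 study turns pairwise votes into a ranking, and paired-comparison data are often analyzed with statistical models such as Bradley-Terry instead. The face labels and votes below are made up.

```python
from collections import Counter

def rank_by_pairwise_votes(votes):
    """Rank faces by the share of their pairwise comparisons they won.

    votes: (face_a, face_b, winner) tuples, where winner is whichever face the
    witness judged more like the remembered perpetrator.
    """
    wins = Counter()
    shown = Counter()
    for face_a, face_b, winner in votes:
        shown[face_a] += 1
        shown[face_b] += 1
        wins[winner] += 1
    win_rate = {face: wins[face] / shown[face] for face in shown}
    return sorted(win_rate, key=win_rate.get, reverse=True)

# Hypothetical session: "S" is the suspect, F1-F3 are fillers.
# Some pairs are suspect vs. filler, some are filler vs. filler.
votes = [
    ("S", "F1", "S"), ("S", "F2", "S"), ("F1", "F2", "F1"),
    ("F2", "F3", "F3"), ("S", "F3", "S"), ("F1", "F3", "F1"),
]
ranking = rank_by_pairwise_votes(votes)
print(ranking, "suspect ranked first:", ranking[0] == "S")
```

Because the witness never has to say “that’s the one,” there is no single moment at which an assumption that the culprit must be present can push them toward a pick.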

Wixted says the approach is “a great idea, but too far ahead of its time.” Defense attorneys would likely attack any evidence that lacks a direct witness identification.

Putting fingerprints to the test

Fingerprints have been police tools for more than a century. For much of that history, they were considered infallible.

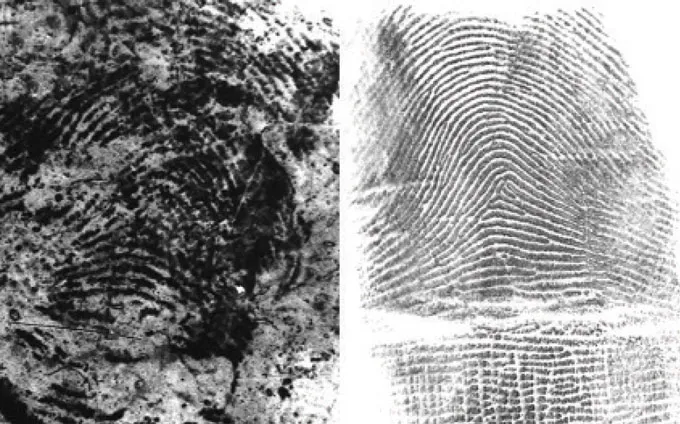

Limitations to fingerprint analysis came to light in spectacular fashion in 2004, with the bombing of four commuter trains in Madrid. Spanish police found a blue plastic bag full of detonators and traces of explosives. Forensic experts used a standard technique to raise prints off the bag: fumigating it with vaporized superglue, which stuck to the finger marks, and staining the bag with fluorescent dye to reveal a blurry fingerprint.

Running that print against the FBI’s fingerprint database highlighted a possible match to Brandon Mayfield, an Oregon lawyer. One FBI expert, then another, then another confirmed Mayfield’s print matched the one from the bag.

Mayfield was arrested. But he hadn’t been anywhere near Madrid during the bombing. He didn’t even possess a current passport. Spanish authorities later arrested someone else, and the FBI apologized to Mayfield and let him go.

The case highlights an unfortunate “paradox” resulting from fingerprint databases, in that “the larger the databases get … the larger the probability that you find a spurious match,” says Alicia Carriquiry. She directs the Center for Statistics and Applications in Forensic Evidence, or CSAFE, at Iowa State University.
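A back-of-the-envelope calculation shows why database size matters. If each comparison with an unrelated print has some small chance p of looking like a match, and comparisons are treated as independent (a simplifying assumption), the chance of at least one spurious hit among N prints is 1 - (1 - p)^N. The false-match rate used below is hypothetical, not a measured value.

```python
import math

def prob_any_spurious_match(false_match_rate, database_size):
    """Chance that at least one unrelated print clears the match threshold.

    Assumes every comparison is independent and shares the same (hypothetical)
    false-match rate p, so P = 1 - (1 - p)^N. log1p/expm1 keep the result
    accurate when p is tiny.
    """
    return -math.expm1(database_size * math.log1p(-false_match_rate))

p = 1e-6  # hypothetical per-comparison false-match rate
for n in (1_000, 100_000, 1_000_000, 100_000_000):
    print(f"{n:>11,} prints: {prob_any_spurious_match(p, n):.4f}")
```

With that hypothetical one-in-a-million rate, a database of a thousand prints gives roughly a 0.1 percent chance of a spurious hit, while a database of a million prints pushes it to about 63 percent.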

In fingerprint analyses, the question at hand is whether two prints, one from a crime scene and one from a suspect or a fingerprint database, came from the same digit (SN: 8/26/15). The problem is that prints lifted from a crime scene are often partial, distorted, overlapping or otherwise hard to make out. The expert’s challenge is to identify features called minutiae, such as the place a ridge ends or splits in two, and then decide if they correspond between two prints.
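To make the idea of corresponding minutiae concrete, here is a deliberately simplified toy. It assumes the two prints have already been aligned in the same coordinate frame, represents each minutia as a hypothetical (x, y, ridge direction, type) tuple, and uses arbitrary tolerances. Real examiners and automated systems also have to cope with distortion, partial marks and the spatial relationships among minutiae, none of which this sketch handles.

```python
import math

def count_corresponding_minutiae(mark, reference, max_dist=10.0, max_angle_deg=20.0):
    """Greedily pair minutiae that agree in type, position and ridge direction.

    Each minutia is (x, y, angle_deg, kind), with kind "ending" or "bifurcation".
    Assumes both prints are already aligned; tolerances are arbitrary choices.
    """
    unused = list(reference)
    matched = 0
    for x, y, angle, kind in mark:
        for i, (rx, ry, rangle, rkind) in enumerate(unused):
            diff = abs(angle - rangle) % 360
            diff = min(diff, 360 - diff)  # directions wrap around at 360 degrees
            if kind == rkind and math.hypot(x - rx, y - ry) <= max_dist and diff <= max_angle_deg:
                matched += 1
                del unused[i]  # each reference minutia can be used only once
                break
    return matched

# Hypothetical aligned minutiae, for illustration only.
crime_mark = [(10, 10, 90, "ending"), (50, 40, 180, "bifurcation"), (80, 80, 0, "ending")]
suspect_print = [(12, 11, 85, "ending"), (52, 38, 175, "ending"), (81, 79, 355, "ending")]
print(count_corresponding_minutiae(crime_mark, suspect_print))
```

The middle minutia fails because the types disagree (a split versus an ending), which is exactly the kind of call that becomes hard on a blurry, partial mark.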

Studies since the Madrid bombing illustrate the potential for mistakes. In a 2011 report, FBI researchers tested 169 experienced print examiners on 744 fingerprint pairs, of which 520 pairs contained true matches. Eighty-five percent of the examiners missed at least one of the true matches in a subset of 100 or so pairs each examined. Examiners can also be inconsistent: In a subsequent study, the researchers brought back 72 of those examiners seven months later and gave them 25 of the same fingerprint pairs they saw before. The examiners changed their conclusions on about 10 percent of the pairings.

Forensic examiners can also be biased when they think they see a very rare feature in a fingerprint and mentally assign that feature a higher significance than others, Quigley-McBride says. No one has checked exactly how rare individual features are, but she is part of a CSAFE team quantifying these features in a database of more than 2,000 fingerprints.

Computer software can assist fingerprint experts with a “sanity check,” says forensic scientist Glenn Langenburg, owner of the consulting firm Elite Forensic Services in St. Paul, Minn. One option is a program known rather informally as Xena (yes, for the television warrior princess) developed by Langenburg’s former colleagues at the University of Lausanne in Switzerland.

Xena’s goal is to calculate a likelihood ratio, a number that compares the probability of a fingerprint looking like it does if it came from the suspect (the numerator) versus the probability of the fingerprint looking as it does if it’s from some random, unidentified individual (the denominator). The same type of statistic is used to support DNA evidence.
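Written as a formula (the notation here is ours, not Xena’s), with E the observed features of the crime scene print, H_p the hypothesis that the suspect left it and H_d the hypothesis that some random, unidentified person did:

```latex
\mathrm{LR} = \frac{P(E \mid H_p)}{P(E \mid H_d)}
```

A value above 1 means the evidence is more probable if the suspect is the source; a value below 1 means it is more probable if someone else is.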

To compute the numerator probability, the program starts with the suspect’s pristine print and simulates various ways it might be distorted, creating 700 possible “pseudomarks.” Then Xena asks, if the suspect is the person behind the print from the crime scene, what’s the probability any of those 700 could be a good match?

To calculate the denominator probability, the program compares the crime scene print to 1 million fingerprints from random people and asks, what are the chances that this crime scene print would be a good match for any of these?

If the likelihood ratio is high, that suggests the similarities between the two prints are more likely if the suspect is indeed the source of the crime scene print than if not. If it’s low, then the statistics suggest it’s quite possible the print didn’t come from the suspect. Xena wasn’t available at the time of the Mayfield case, but when researchers ran those prints later, it returned a very low score for Mayfield, Langenburg says.
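The numerator-and-denominator logic can be sketched with a generic score-based likelihood ratio. This technique is named here as a stand-in and is not Xena’s actual model. The sketch assumes some comparison algorithm has already produced similarity scores: one set from comparisons where the suspect is the source (for instance, the crime scene mark against simulated distortions of the suspect’s print, like the pseudomarks), another from comparisons with unrelated prints. It then estimates how probable the observed score is under each hypothesis using Gaussian kernel density estimates. All scores and the bandwidth are hypothetical.

```python
import math

def gaussian_kde(samples, bandwidth):
    """Return a function estimating the probability density of `samples`
    using a Gaussian kernel of the given bandwidth."""
    n = len(samples)
    norm = 1.0 / (n * bandwidth * math.sqrt(2 * math.pi))
    def density(x):
        return norm * sum(math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples)
    return density

def score_based_lr(observed_score, same_source_scores, different_source_scores, bandwidth=0.5):
    """Likelihood ratio for an observed similarity score.

    Numerator: density of the score when the suspect is the source.
    Denominator: density of the score when an unrelated person is the source.
    """
    numerator = gaussian_kde(same_source_scores, bandwidth)(observed_score)
    denominator = gaussian_kde(different_source_scores, bandwidth)(observed_score)
    return numerator / denominator

# Hypothetical similarity scores, for illustration only.
same = [8.0, 8.5, 9.0, 9.5, 10.0]       # suspect-as-source comparisons
different = [1.0, 1.5, 2.0, 2.5, 3.0]   # unrelated-print comparisons
print(score_based_lr(9.0, same, different))  # high score: LR far above 1
print(score_based_lr(2.0, same, different))  # low score: LR far below 1
```

Very large or very small ratios depend on the tails of these estimated distributions, which is one reason a large background set of unrelated prints helps.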

Another option, called FRStat, was developed by the U.S. Army Criminal Investigation Laboratory. It crunches the numbers a bit differently to calculate the degree of similarity between fingerprints after an expert has marked five to 15 minutiae.

While U.S. Army courts have admitted FRStat numbers, and some Swiss agencies have adopted Xena, few fingerprint examiners in the United States have taken up either. But Carriquiry thinks U.S. civilian courts will begin to use FRStat soon.

Trace DNA makes for thin evidence

When DNA evidence was first introduced in the late 20th century, courts debated its merits in what came to be known as the “DNA wars.” The molecules won, and DNA’s current top status in forensic evidence is well-deserved — at least when it’s used in the most traditional sense (SN: 5/23/18).

Forensic scientists traditionally isolate DNA from a sample chock-full of DNA, like bloodstains or semen from a rape kit, and then focus on about 20 specific places in the genome. These are spots where the genetic letters repeat like a stutter, such as GATA GATA GATA. People can have different numbers of repeats at each spot. If the suspect’s profile matches the one from the crime scene evidence, that doesn’t prove the suspect is the source. But because scientists have examined these stutter spots in enough human genomes to know how common each repeat count is, they can calculate a likelihood ratio and testify based on that.
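Here is a minimal sketch of how population frequencies turn a matching profile into a statistic, under textbook assumptions: Hardy-Weinberg genotype frequencies (p squared for two copies of the same repeat count, 2pq for two different ones), independence between spots so per-locus frequencies multiply (the “product rule”), and unrelated individuals. The locus names and allele frequencies are hypothetical, and only three loci are used instead of about 20; working labs apply further corrections.

```python
def genotype_frequency(p, q=None):
    """Hardy-Weinberg genotype frequency: p^2 for a homozygote, 2pq for a heterozygote."""
    return p * p if q is None else 2 * p * q

def random_match_probability(profile, allele_freqs):
    """Multiply per-locus genotype frequencies (the product rule).

    profile: {locus: (repeat_count_1, repeat_count_2)}
    allele_freqs: {locus: {repeat_count: population frequency}}
    """
    rmp = 1.0
    for locus, (a1, a2) in profile.items():
        freqs = allele_freqs[locus]
        if a1 == a2:
            rmp *= genotype_frequency(freqs[a1])
        else:
            rmp *= genotype_frequency(freqs[a1], freqs[a2])
    return rmp

# Hypothetical loci and frequencies, for illustration only.
freqs = {
    "locus_A": {12: 0.1, 14: 0.2},
    "locus_B": {9: 0.3},
    "locus_C": {7: 0.25, 8: 0.5},
}
profile = {"locus_A": (12, 14), "locus_B": (9, 9), "locus_C": (7, 8)}
rmp = random_match_probability(profile, freqs)
print(f"random match probability: {rmp:.6f}, likelihood ratio: {1 / rmp:,.0f}")
```

For a single-source profile that matches, the likelihood ratio in this simplest case is just 1 divided by the random match probability; every additional locus multiplies in another small frequency.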

So far, so good. That procedure can help juries answer the question, “Whose DNA is this?” says Jarrah Kennedy, a forensic DNA scientist at the Kansas City Police Crime Laboratory.

But in recent years, the technology has gotten so sensitive that DNA can now be recovered from even scant amounts of biological material. Forensic scientists can pluck a DNA fingerprint out of just a handful of skin cells found on, say, the handle of a gun. Much of Kennedy’s workload is now examining this kind of trace DNA, she says.

The analysis can be tricky because DNA profiles from trace evidence are less robust. Some stutter numbers might be missing; contamination by other DNA could make extra ones appear. It’s even more complicated if the sample contains more than one person’s DNA. This is where examiners’ expertise, and their opinions, come into play.

“Human people do this work, and human people make mistakes and errors,” says Tiffany Roy, a forensic DNA expert and owner of the consulting firm ForensicAid in West Palm Beach, Fla.

And even if Roy or Kennedy can find a DNA profile on trace evidence, such small amounts of DNA mean that finding a suspect’s profile doesn’t necessarily identify the culprit. Did the suspect’s DNA land on the gun because they pulled the trigger? Or because they handled the weapon weeks before it ever went off?

“It’s not about the ‘who?’ anymore,” Kennedy says. “It’s about ‘how?’ or ‘when?’ ”

Such DNA traces complicated the case of Amanda Knox, the American exchange student in Italy who was convicted in 2009, with two others, of sexually assaulting and killing her roommate. DNA profiles from Knox and her boyfriend were found on the victim’s bra clasp and a knife handle. But experts later deemed the DNA evidence weak: There was a high risk the bra clasp had been contaminated over the weeks it sat at the crime scene, and the signal from the knife was so low, it may have been incorrect. The pair were acquitted, upon appeal, in 2015.

Here, again, statistical software can help forensic scientists decide how many people contributed DNA to a mixture or calculate likelihood ratios. But Roy estimates that only about half of U.S. labs use the most up-to-date tools. “It keeps me awake at night.”
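One simple, long-standing way to estimate how many people contributed to a mixture is the maximum allele count: since each person carries at most two repeat counts at a given spot, a spot showing n distinct repeat counts implies at least ceil(n/2) contributors. The sketch below implements only that heuristic with made-up data; it is not what modern probabilistic genotyping software does, and the missing or extra stutter numbers described above can push the count too low or too high.

```python
import math

def minimum_contributors(alleles_by_locus):
    """Lower bound on the number of contributors to a DNA mixture.

    Each person has at most two alleles per locus, so a locus showing
    n distinct alleles needs at least ceil(n / 2) contributors.
    """
    most = max(len(set(alleles)) for alleles in alleles_by_locus.values())
    return math.ceil(most / 2)

# Hypothetical mixture, for illustration only.
mixture = {
    "locus_A": [12, 14, 15],
    "locus_B": [9, 10],
    "locus_C": [7, 8, 9, 11, 12],  # five distinct repeat counts
}
print(minimum_contributors(mixture))
```

Five distinct repeat counts at one spot cannot come from two people, so at least three must have contributed; the method says nothing about whether there were more.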

And Roy suspects the courts may at some point have to consider whether science can reveal how a person’s DNA got on an item. That’s why, she says, “I think there’s a new DNA war coming.” She doesn’t think the science can go that far.

When science saves the day

Change happens slowly, Wixted says. And Flores and others remain incarcerated despite efforts by Sween and other advocates to challenge faulty evidence.

One reason U.S. courts often lag behind the science is that it’s up to the judge to decide whether any specific bit of evidence is included in a trial. The federal standard on expert testimony, known as Rule 702 and first set out in 1975, is generally interpreted to mean that judges must assess whether the science in question is performed according to set standards, has a potential or known error rate, and has been through the wringer of scientific peer review. But in practice, many judges don’t do much in the way of gatekeeping. Last December, Rule 702 was updated to reemphasize the role of judges in blocking inappropriate science or experts.

In Texas, Sween says she’s not done fighting for Flores, who’s still living in a six-by-nine-foot cell on death row but has graduated from a faith-based rehabilitation program and started a book club with the help of someone on the outside. “He’s a pretty remarkable guy,” Sween says.

But in another case Wixted was involved with, the new memory science led to a happier ending.

Miguel Solorio was arrested in 1998, suspected of a drive-by shooting in Whittier, Calif. His girlfriend, now his wife, provided an alibi. None of the four eyewitnesses identified him the first time they saw a lineup. But the police kept showing them additional lineups, with Solorio in every one. Eventually, two witnesses identified him in court. He was convicted and sentenced to life in prison without parole.

When the Northern California Innocence Project and the Los Angeles County District Attorney’s Office took a fresh look at the case, they realized that the eyewitnesses’ memories had been contaminated by the repeated lineups. The initial tests were “powerful evidence of Mr. Solorio’s innocence,” the district attorney wrote in an official concession letter.

Last November, Solorio walked out of prison, a free man.

Investigating crime science

Some forensic techniques that seem scientific have been criticized as subjective, and their claimed certainty has been questioned. That doesn’t necessarily mean they’re kept out of court or that they lack merit. For some techniques, researchers are studying how to make them more accurate.

Hair analysis

Experts judge traits such as color, texture and microscopic features to see if it’s possible a hair came from a suspect, but not to make a direct match. Analysis of DNA from hair has largely supplanted physical examination. But if no root is present, authorities won’t be able to extract a complete DNA profile. Scientists at the National Institute of Standards and Technology are analyzing whether certain hair proteins, which vary from person to person, can be correlated with a suspect’s own hair protein or DNA profile.

Fire scene investigation

Fire investigators once thought certain features, such as burn “pour patterns,” indicated an arsonist used fuel to spur a fast-spreading fire. In fact, these and other signs once linked to arson can appear in accidental fires, too, for example due to high temperatures or water from a firefighter’s hose. A 2017 report from the American Association for the Advancement of Science said identifying a fire’s origin and cause “can be very challenging and is based on subjective judgments and interpretations.”

Firearms analysis

A gun’s internal parts leave “toolmarks” on the bullet. Examiners study these microscopic marks to decide whether two bullets probably came from the same gun. A 2016 President’s Council of Advisors on Science and Technology report said the practice “falls short of the scientific criteria for foundational validity.” In 2023, a judge ruled for the first time that this kind of evidence was inadmissible. Researchers at NIST and the Center for Statistics and Applications in Forensic Evidence, or CSAFE, are developing automated, quantifiable methods to improve objectivity.
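A basic ingredient of automated comparisons is cross-correlation: slide one bullet’s surface profile (a sequence of height measurements across a mark) past the other’s and find the shift at which they line up best. The sketch below shows only that step on invented profiles. It is a generic technique named for illustration, not NIST’s or CSAFE’s actual method; real pipelines are considerably more involved.

```python
import math

def correlation_at_lag(a, b, lag):
    """Pearson correlation between a[i + lag] and b[i] over the overlapping samples."""
    if lag >= 0:
        x, y = a[lag:], b
    else:
        x, y = a, b[-lag:]
    n = min(len(x), len(y))
    if n < 2:
        return 0.0
    x, y = x[:n], y[:n]
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sd_x = math.sqrt(sum((xi - mean_x) ** 2 for xi in x))
    sd_y = math.sqrt(sum((yi - mean_y) ** 2 for yi in y))
    if sd_x == 0 or sd_y == 0:
        return 0.0  # a flat profile carries no pattern to correlate
    return cov / (sd_x * sd_y)

def max_cross_correlation(a, b, max_lag):
    """Best correlation over shifts from -max_lag to +max_lag, with the shift that achieves it."""
    return max((correlation_at_lag(a, b, lag), lag) for lag in range(-max_lag, max_lag + 1))

# Invented profiles: profile_b is profile_a shifted by two samples.
profile_a = [0, 1, 3, 2, 0, -1, -2, 0, 1, 0, 2, 4, 1, 0, -1]
profile_b = profile_a[2:] + [0, 0]
best_corr, best_lag = max_cross_correlation(profile_a, profile_b, 3)
print(f"best correlation {best_corr:.3f} at shift {best_lag}")
```

A single correlation number is not a verdict; the point of the automated approaches is to attach measured error rates to such scores.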

Bloodstain pattern analysis

Experts examine blood pooled or spattered at a crime scene to determine the cause, such as stabbing, and the point of origin, such as the height the blood came from. While some of this is scientifically valid, the analysis can be complex, with overlapping blood patterns. In 2009, the National Academy of Sciences warned that “some experts extrapolate far beyond what can be supported.” CSAFE has compiled a blood spatter database and is working on more objective approaches.
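Textbook bloodstain geometry shows what estimating “the height the blood came from” involves. For an elliptical stain, the impact angle is commonly estimated from sin(angle) = width / length, and a straight-line (tangent) method then estimates height as the distance from the stain to the area where the stains’ paths converge, multiplied by tan(angle). This sketch assumes straight flight paths, ignoring gravity and air resistance, which is one reason such estimates carry uncertainty; the measurements are hypothetical.

```python
import math

def impact_angle_deg(width, length):
    """Impact angle of a drop from an elliptical stain: sin(angle) = width / length.

    Requires 0 < width <= length.
    """
    return math.degrees(math.asin(width / length))

def origin_height(distance_to_convergence, angle_deg):
    """Tangent method: height = distance to the area of convergence * tan(angle).

    Assumes a straight-line trajectory (no gravity or air resistance).
    """
    return distance_to_convergence * math.tan(math.radians(angle_deg))

# Hypothetical stain: 5 mm wide, 10 mm long, 100 cm from the area of convergence.
angle = impact_angle_deg(5, 10)
print(f"impact angle: {angle:.1f} degrees")
print(f"estimated height: {origin_height(100, angle):.1f} cm")
```

Overlapping patterns, like those mentioned above, make even these basic measurements hard to take cleanly.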