Want to spot a deepfake? The eyes could be a giveaway

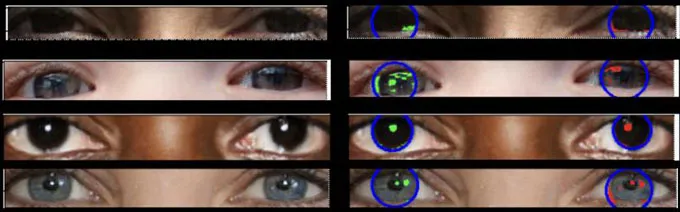

A technique from astronomy could reveal reflection differences in AI-generated people’s eyeballs

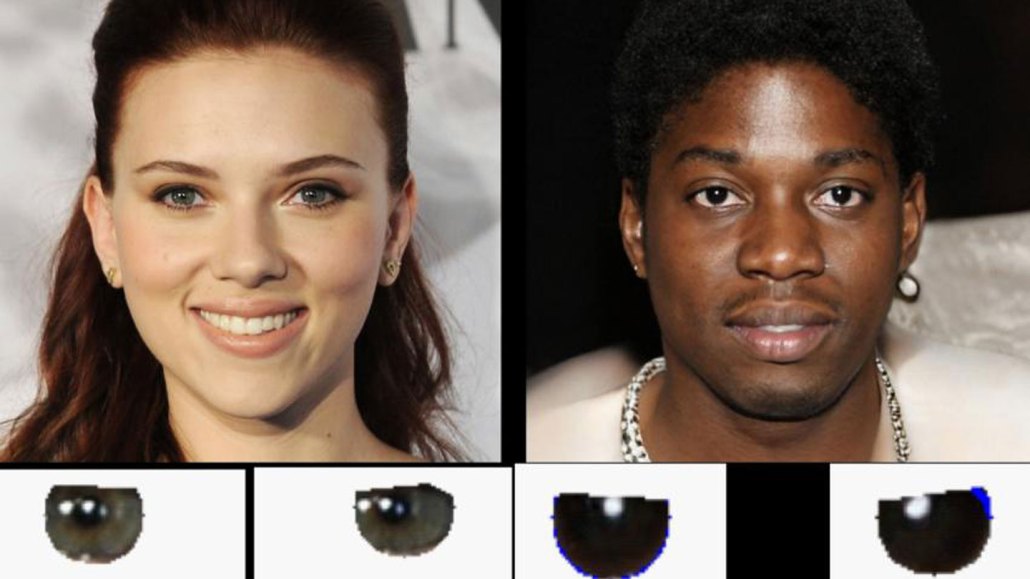

Reflections in the eyeballs reveal that the image on the right is AI-generated, whereas the image on the left is a real photo (of the actress Scarlett Johansson).

Adejumoke Owolabi