A British company called Shazam is making big bucks off the mathematical analysis of music. Avery Wang, one of the company’s founders, recently met with me in a coffee shop in California to explain how his company is doing it.

Wang wasted no time before beginning a demonstration. He put down his coffee cup and held his cell phone in the air, far from his ear. He looked like someone who had somehow missed the last ten years and hadn’t a clue about the basic function of the gadget in his hand.

“Let’s see what’s playing,” he said. The light jazz playing in the coffee shop was almost drowned out by the forced hiss of the espresso machine and the caffeine-charged chatter around us. A bar on the cell phone counted down 10 seconds, and then some text appeared on the tiny screen.

“Esbjorn Svensson Trio, Strange Place for Snow,” he said, holding the cell phone close enough to read the song title on the display. “Ever heard of them?” I shook my head. “Me neither,” he said.

Six million people now use Shazam’s software to identify, and sometimes purchase, music they hear while walking down the street, sitting in a café, or doing just about anything else. Wang is the engineer who designed the system to recognize music picked up through a cell phone.

The service had a precarious start, Wang told me. Three MBAs recruited him to figure out how to make the technical details work while they raised the money. “I thought it was kind of ridiculous,” Wang said. “This was backward from how things are usually done. You usually have technology before you start selling it.”

They made a plan for the cell phone to pick up the audio and send it to Shazam. Wang’s program would somehow match the signal it received with one of the songs stored in its database. But when Wang heard the quality of the recordings that came through on the cell phone, he almost despaired. Some of the recordings were of such poor quality that he could hardly tell whether any music was playing at all, much less what it was. “I thought, ‘This is not a solvable problem!’” Wang told me.

Background noises are exactly what cell phones don’t want to capture. The software that runs cell phones tries to filter out as much of that noise as possible and zoom in on the voice. Whatever bits of the music a phone does capture, it then compresses, sacrificing sound fidelity.

Furthermore, Wang needed to find an algorithm that would allow the program to search through a very large database quickly, rarely misidentify a song, and be able to identify the song based on any passage that happens to be playing.

After three months, he hadn’t made any progress. Meanwhile, his MBA partners were raising millions of dollars for the project. Wang was wondering how he was going to break the bad news to them and pondering what his next job might be, when suddenly he happened upon the breakthrough they’d all been counting on.

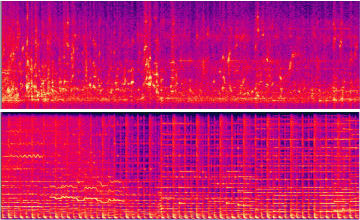

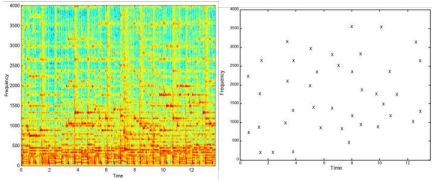

He was analyzing the music’s spectrogram, which is a graph that shows the intensity of the sound wave at each frequency over time. He found that the energy peaks, also called spectral peaks, showed up in both the original song and the version he received over the cell phone.
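For readers who want to see the idea in miniature, here is a rough sketch of a spectrogram and its strongest peak in each time slice. It is not Shazam's code. The function names, the frame size, the hop length, and the use of NumPy are all assumptions made for illustration.

```python
import numpy as np

def spectrogram(signal, frame_size=256, hop=128):
    """Magnitude spectrogram: one row per time frame, one column per frequency bin.

    frame_size and hop are illustrative choices, not values from the article.
    """
    window = np.hanning(frame_size)
    n_frames = 1 + (len(signal) - frame_size) // hop
    # Slice the signal into overlapping, windowed frames.
    frames = np.stack([
        signal[i * hop : i * hop + frame_size] * window
        for i in range(n_frames)
    ])
    # The FFT of each frame gives the intensity at each frequency.
    return np.abs(np.fft.rfft(frames, axis=1))

def strongest_bins(spec):
    """Index of the loudest frequency bin in every frame (a crude 'spectral peak')."""
    return np.argmax(spec, axis=1)

# A 500 Hz tone sampled at 8000 Hz, then the same tone buried in random noise.
rate = 8000
t = np.arange(rate) / rate
clean = np.sin(2 * np.pi * 500 * t)
noisy = clean + np.random.default_rng(0).normal(0.0, 0.5, size=clean.shape)

clean_peaks = strongest_bins(spectrogram(clean))
noisy_peaks = strongest_bins(spectrogram(noisy))
```

In this toy case the loudest bin is the same in the clean and the noisy version, which is the property Wang noticed: the peaks survive even when much else about the recording is degraded.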

The spectral peaks aren’t something you directly perceive in the music, Wang explained. “Your ear is a spectral analyzer, so it is what you hear. But your brain doesn’t look at sound in terms of spectral peaks. You don’t hear the individual waves. Instead you hear the ensemble of all the partial waves. The ensemble together gives you the perception of a tone.”

Nevertheless, the peaks stood out on Wang’s spectrograms as red dots, and he was able to line them right up. To speed up the program and increase its robustness, he used a standard technique from computer science known as “hashing,” in which he compared pairs of spectral peaks from each version. In many of the pairs of peaks taken from the signal sent by cell phone, at least one peak came from noise. But he found that he could identify the piece even if only 5% of the pairs came from the original music.
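One way to picture hashing pairs of peaks is the sketch below. It is a guess at the general shape of such a scheme, not Shazam's actual method. The hash key (two frequencies plus the time gap between them), the `fan_out` limit, the vote counting by time offset, and every function name are assumptions introduced here for illustration.

```python
from collections import Counter, defaultdict

def pair_hashes(peaks, fan_out=3):
    """Yield ((freq1, freq2, time_gap), time1) for each peak and a few later peaks.

    peaks is a list of (time, freq) tuples; fan_out is a hypothetical parameter.
    """
    peaks = sorted(peaks)
    for i, (t1, f1) in enumerate(peaks):
        for t2, f2 in peaks[i + 1 : i + 1 + fan_out]:
            yield (f1, f2, t2 - t1), t1

def build_index(songs):
    """Map every pair hash to the songs (and times) where it occurs."""
    index = defaultdict(list)
    for song_id, peaks in songs.items():
        for key, t1 in pair_hashes(peaks):
            index[key].append((song_id, t1))
    return index

def identify(index, query_peaks):
    """Return the song whose matching pairs agree on a single time offset, or None."""
    votes = Counter()
    for key, t_query in pair_hashes(query_peaks):
        for song_id, t_song in index.get(key, ()):
            votes[(song_id, t_song - t_query)] += 1
    if not votes:
        return None
    (song_id, _offset), _count = votes.most_common(1)[0]
    return song_id

# Two made-up songs, each a short list of (time, frequency) peaks.
songs = {
    "song_a": [(0, 10), (1, 20), (2, 30), (3, 40), (4, 50)],
    "song_b": [(0, 11), (1, 25), (2, 33), (3, 47), (4, 52)],
}
index = build_index(songs)

# A snippet from the middle of song_a, with two spurious noise peaks mixed in.
query = [(0, 30), (1, 40), (2, 50), (0, 99), (1, 77)]
result = identify(index, query)
```

Pairs that include a noise peak simply fail to match anything in the index, so they cost little. Only the few pairs made entirely of real peaks cast votes, which is why a small fraction of good pairs can be enough.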

It’s hard to quantify the reliability of this system, Wang said, because it depends so much on the amount of noise, the strength of the signal, and the loudness of the original music. But it often performs better than the human ear, and while it sometimes can’t find a match at all, it almost never falsely identifies a piece.

As Wang and I carried on chatting in the coffee shop, he continued to use Shazam to identify songs. We could hear the voice of a woman cheerily singing “It’s a good day,” and Shazam identified her as Peggy Lee. Shazam also told us that the person singing a bubbly jazz song called “I’ll Be Seeing You” was, of all people, the punk-edged rocker Iggy Pop.

Each new song presented a puzzle, and the cell phone would have the answer. I started getting hooked.
